Project Overview

An open-source Streamlit application that validates API keys for various LLM providers and displays quota/usage information in a user-friendly dashboard.

Introduction

In the rapidly evolving landscape of artificial intelligence, enterprise developers and AI engineers increasingly use multiple Large Language Model (LLM) providers to balance performance, cost, and reliability. This multi-provider approach, while advantageous, makes API key management considerably more complex. Each provider, whether OpenAI, Anthropic, Groq, or another, has its own authentication mechanism, quota structure, and usage-monitoring system.

To address this challenge, I developed the LLM API Key Validator, an open-source Streamlit application. It validates API credentials across many providers through a single interface and presents quota and usage analytics in a dashboard. The result is a simpler development workflow and better operational visibility for teams working with multiple AI services.

The Enterprise Challenge of Multi-Provider LLM Authentication

Organizations implementing AI solutions at scale encounter several critical operational challenges when interfacing with multiple LLM providers:

- Authentication Integrity Verification: Efficiently validating API key status without executing resource-intensive model calls or incurring unnecessary costs

- Resource Allocation Monitoring: Maintaining real-time visibility into quota consumption and credit balances across heterogeneous provider platforms

- Model Accessibility Mapping: Systematically identifying which specific models and capabilities are accessible with each authentication credential

- Rate Limit Management: Understanding the throughput constraints attached to each provider's API keys and designing workloads to stay within them

- Enterprise-Scale Validation: Conducting efficient batch validation during system migrations, security audits, or organizational restructuring

These technical challenges are further amplified in enterprise environments with distributed development teams, shared authentication credentials, and complex multi-project architectures with varying API requirements and security protocols.

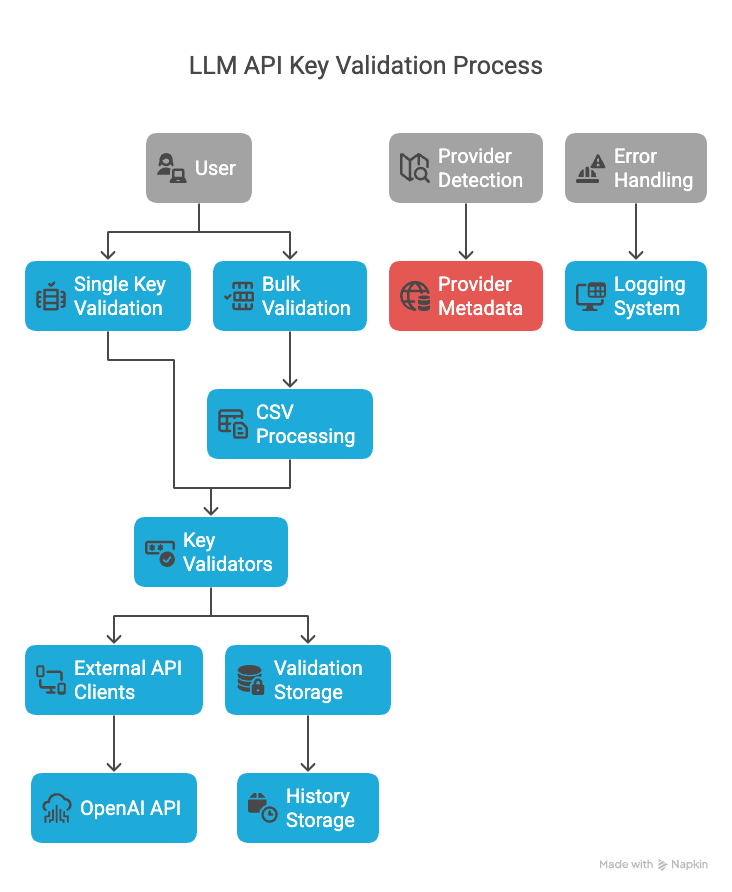

Enterprise-Grade Solution Architecture

The LLM API Key Validator uses a layered architecture built around separation of concerns. Each component is isolated so that it can be extended and maintained independently.

Architectural diagram illustrating the layered design pattern implementation

The system architecture comprises five distinct functional layers, each with clearly defined responsibilities:

- Presentation Layer: A responsive Streamlit-powered interface featuring dedicated modules for individual credential validation, batch processing operations, historical analysis, and comprehensive provider documentation

- Domain Layer: Robust abstract base classes establishing the core domain model for API credentials and validation services, providing a consistent interface across the system

- Service Layer: Provider-specific validator implementations that encapsulate the unique authentication protocols, endpoint structures, and response formats for each LLM service

- Infrastructure Layer: Specialized utility services handling cross-cutting concerns including provider detection algorithms, persistent storage operations, and comprehensive logging

- Persistence Layer: Structured JSON data stores holding provider specifications and the validation audit trail
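The domain layer's role can be sketched with two abstractions. The field names `api_key`, `is_valid` and `message` match the validator code later in this article. The `models` field and the `EchoValidator` class are hypothetical; they are included only so the sketch runs without network access. This is a minimal illustration of the pattern under those assumptions, not the project's actual classes.

```python
from __future__ import annotations

import asyncio
from abc import ABC, abstractmethod
from dataclasses import dataclass, field


@dataclass
class APIKey:
    """Domain object: a credential plus whatever validation learned about it."""
    api_key: str
    is_valid: bool | None = None  # None until a validator has run
    message: str = ""
    models: list[str] = field(default_factory=list)  # hypothetical extra field


class Validator(ABC):
    """Contract that every provider-specific validator in the service layer fulfils."""

    @abstractmethod
    async def validate(self, api_key: APIKey) -> APIKey:
        """Return the same key with is_valid/message (and any extras) filled in."""


class EchoValidator(Validator):
    """Hypothetical stand-in that only shows the contract; it makes no network call."""

    async def validate(self, api_key: APIKey) -> APIKey:
        api_key.is_valid = bool(api_key.api_key)
        api_key.message = "non-empty key" if api_key.is_valid else "empty key"
        return api_key


if __name__ == "__main__":
    result = asyncio.run(EchoValidator().validate(APIKey("sk-example")))
    print(result.is_valid, result.message)
```

Because `validate` is abstract, a provider class that forgets to implement it fails at instantiation rather than at validation time.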

Key Features

1. Multi-Provider Support

The application supports a wide range of LLM providers, including:

- OpenAI (GPT models)

- Anthropic (Claude models)

- Groq

- Mistral AI

- Cohere

- Google (Gemini models)

- OpenRouter

- Together AI

- Perplexity

- And many more...

Each provider has a dedicated validator that understands the specific API endpoints and response formats.
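Validators differ mostly in which endpoint they call and how they send the key. Below is a minimal, data-driven sketch of that idea. `ProviderSpec`, `PROVIDER_SPECS` and `build_request` are hypothetical names. The URLs and headers reflect these providers' public model-listing endpoints as commonly documented, so check them against current provider documentation before relying on them.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class ProviderSpec:
    models_url: str        # cheap, read-only endpoint that still requires auth
    auth_header: str       # header that carries the key
    auth_prefix: str = ""  # e.g. "Bearer " for OpenAI-compatible APIs
    extra_headers: tuple[tuple[str, str], ...] = ()


# Illustrative entries only; verify against each provider's docs.
PROVIDER_SPECS = {
    "openai": ProviderSpec("https://api.openai.com/v1/models", "Authorization", "Bearer "),
    "groq": ProviderSpec("https://api.groq.com/openai/v1/models", "Authorization", "Bearer "),
    "mistral": ProviderSpec("https://api.mistral.ai/v1/models", "Authorization", "Bearer "),
    "anthropic": ProviderSpec(
        "https://api.anthropic.com/v1/models",
        "x-api-key",
        extra_headers=(("anthropic-version", "2023-06-01"),),
    ),
}


def build_request(provider: str, key: str) -> tuple[str, dict[str, str]]:
    """Return the (url, headers) pair a validator would send for this provider."""
    spec = PROVIDER_SPECS[provider]
    headers = {spec.auth_header: spec.auth_prefix + key}
    headers.update(dict(spec.extra_headers))
    return spec.models_url, headers
```

Keeping this table as data, for example in a JSON provider-specification file, could let an OpenAI-compatible provider be added without a new class. Providers with unusual authentication would still get a dedicated validator.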

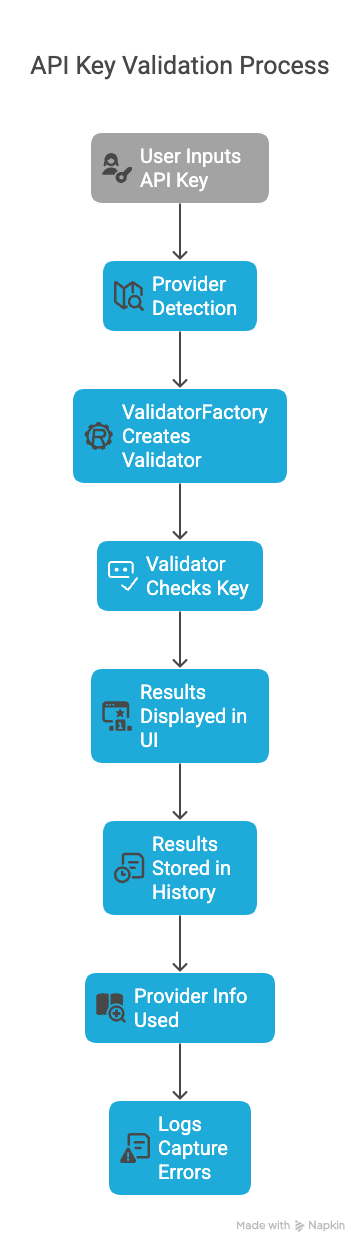

2. Automatic Provider Detection

One of the most useful features is automatic detection of the provider from the API key's format. Users don't need to say which provider a key belongs to, because the application works it out.

The API validation process flow showing automatic provider detection
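Prefix matching is one plausible way to implement detection. The prefixes below are assumptions based on commonly seen key formats, such as Anthropic keys starting with `sk-ant-` and Groq keys with `gsk_`. They are not the project's published rules, and `detect_provider` is a hypothetical name.

```python
from __future__ import annotations

# Assumed prefixes, ordered so that more specific ones are tried before "sk-".
KEY_PREFIXES: list[tuple[str, str]] = [
    ("sk-ant-", "anthropic"),
    ("sk-or-", "openrouter"),
    ("gsk_", "groq"),
    ("pplx-", "perplexity"),
    ("AIza", "google"),
    ("sk-", "openai"),  # generic fallback; must stay last among the "sk-" family
]


def detect_provider(key: str) -> str | None:
    """Guess the provider from the key's prefix; None means the format is ambiguous."""
    key = key.strip()
    for prefix, provider in KEY_PREFIXES:
        if key.startswith(prefix):
            return provider
    return None
```

Order matters here. `sk-ant-` and `sk-or-` must be checked before the generic `sk-`, or every Anthropic and OpenRouter key would be reported as OpenAI. Keys with no distinctive prefix fall through to `None`. At that point the application needs a fallback, such as asking the user or trying several validators.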

3. Comprehensive Validation Results

For each validated key, the application provides detailed information:

- Validity status

- Available models and their capabilities

- Usage statistics and remaining credits

- Rate limits and token limits

- Account tier information
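Rate and token limits are often reported in response headers rather than in the response body. The sketch below assumes OpenAI-style `x-ratelimit-*` header names, which OpenAI documents and some OpenAI-compatible providers reuse. Whether a particular endpoint actually returns them needs to be verified per provider, and `parse_rate_limits` is a hypothetical helper.

```python
from __future__ import annotations

from collections.abc import Mapping

PREFIX = "x-ratelimit-"
# Integer counters only; the matching "reset" headers are durations like "6m0s".
WANTED = (
    "x-ratelimit-limit-requests",
    "x-ratelimit-remaining-requests",
    "x-ratelimit-limit-tokens",
    "x-ratelimit-remaining-tokens",
)


def parse_rate_limits(headers: Mapping[str, str]) -> dict[str, int]:
    """Collect OpenAI-style rate-limit counters into a flat dict."""
    lowered = {k.lower(): v for k, v in headers.items()}  # header names are case-insensitive
    limits: dict[str, int] = {}
    for name in WANTED:
        value = lowered.get(name)
        if value is not None and value.isdigit():
            # "x-ratelimit-remaining-tokens" -> "remaining_tokens"
            limits[name[len(PREFIX):].replace("-", "_")] = int(value)
    return limits
```

Lowercasing the keys lets the same function accept a plain `dict` or an HTTP client's header mapping, whatever case the server used.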

4. Bulk Validation

For teams managing multiple keys, the bulk validation feature allows uploading a CSV file with multiple API keys and validating them all at once. The results can be downloaded as a CSV for further analysis or record-keeping.
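The bulk flow can be sketched with the standard library plus `asyncio`, under a few assumptions:

- The uploaded CSV has an `api_key` column.
- The validator is any coroutine returning `(is_valid, message)`.
- `fake_validate` stands in for real provider calls.

Masking keys in the output file is a design choice added here, not a documented feature of the project.

```python
from __future__ import annotations

import asyncio
import csv
import io
from typing import Awaitable, Callable, Tuple

# Hypothetical validator shape: takes a raw key, returns (is_valid, message).
ValidateFn = Callable[[str], Awaitable[Tuple[bool, str]]]


async def validate_csv(csv_text: str, validate: ValidateFn, max_concurrency: int = 5) -> str:
    """Validate every key in the 'api_key' column and return the results as CSV text."""
    reader = csv.DictReader(io.StringIO(csv_text))
    keys = [k for k in ((row.get("api_key") or "").strip() for row in reader) if k]
    semaphore = asyncio.Semaphore(max_concurrency)  # at most N requests in flight

    async def check(key: str) -> dict[str, str]:
        async with semaphore:
            is_valid, message = await validate(key)
        # Write only the last four characters back out, never the full key
        return {"key": "..." + key[-4:], "is_valid": str(is_valid), "message": message}

    # gather() returns results in the same order as the input keys
    results = await asyncio.gather(*(check(k) for k in keys))

    out = io.StringIO()
    writer = csv.DictWriter(out, fieldnames=["key", "is_valid", "message"])
    writer.writeheader()
    writer.writerows(results)
    return out.getvalue()


async def fake_validate(key: str) -> Tuple[bool, str]:
    """Offline stand-in for a real provider call."""
    await asyncio.sleep(0.01)  # simulate network latency
    ok = key.startswith("sk-")
    return ok, "Valid API key" if ok else "Unrecognised key format"


if __name__ == "__main__":
    print(asyncio.run(validate_csv("api_key\nsk-abc123\nbogus\n", fake_validate)))
```

The semaphore caps how many requests are in flight at once, so a large upload does not open a connection per row simultaneously. `asyncio.gather` returns results in input order, which keeps the output rows aligned with the uploaded file.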

5. Validation History

The application maintains a history of all validations, making it easy to track changes in key status over time. This is particularly useful for monitoring usage patterns and detecting when keys are approaching their limits.
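One simple way to keep such a history is an append-only JSON Lines file, a variant of the JSON stores described in the architecture. In this sketch, the file layout, `mask_key`, `record_validation` and `load_history` are all hypothetical. Only a masked form of each key is persisted.

```python
from __future__ import annotations

import json
from datetime import datetime, timezone
from pathlib import Path


def mask_key(key: str) -> str:
    """Keep just enough of the key to recognise it, never the secret itself."""
    return key[:4] + "..." + key[-4:] if len(key) > 12 else "****"


def record_validation(path: Path, key: str, provider: str, is_valid: bool, message: str) -> None:
    """Append one validation event as a JSON line; the raw key is never written."""
    entry = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "key": mask_key(key),
        "provider": provider,
        "is_valid": is_valid,
        "message": message,
    }
    with path.open("a", encoding="utf-8") as fh:
        fh.write(json.dumps(entry) + "\n")


def load_history(path: Path, masked_key: str | None = None) -> list[dict]:
    """Read all events (optionally for one masked key), oldest first."""
    if not path.exists():
        return []
    with path.open(encoding="utf-8") as fh:
        entries = [json.loads(line) for line in fh if line.strip()]
    return [e for e in entries if masked_key is None or e["key"] == masked_key]
```

Appending one line per event avoids rewriting the whole file on every validation. A masked key is not unique, though. Two keys sharing their first and last four characters would share a history, so a real store might index on a hash of the full key instead.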

Technical Implementation

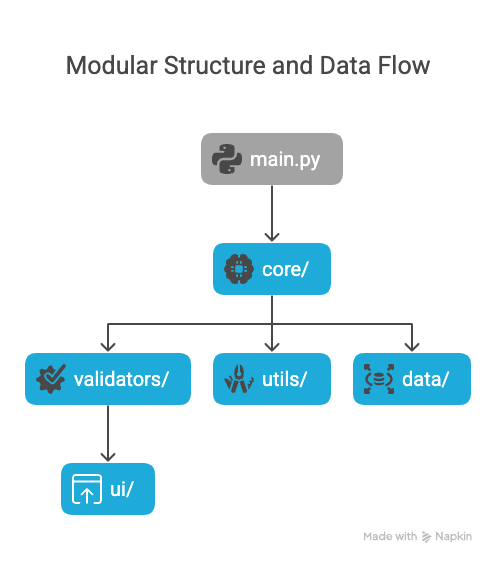

Modular Structure

The project follows a clean, modular structure that makes it easy to extend and maintain:

Modular structure showing the organization of the codebase

Asynchronous API Calls

To improve performance, especially during bulk validation, the application uses asynchronous API calls with aiohttp. This allows multiple validations to run concurrently, significantly reducing the total validation time.

```python
import aiohttp

# APIKey, OpenAIKey and Validator are the project's own domain classes.


class OpenAIValidator(Validator):
    async def validate(self, api_key: APIKey) -> APIKey:
        """
        Validate the API key against the provider's API.

        Args:
            api_key: The API key to validate

        Returns:
            The validated API key with updated information
        """
        # Leave keys that belong to other providers untouched
        if not isinstance(api_key, OpenAIKey):
            return api_key

        # Listing models is an authenticated call that consumes no tokens
        url = "https://api.openai.com/v1/models"
        headers = {"Authorization": f"Bearer {api_key.api_key}"}
        timeout = aiohttp.ClientTimeout(total=10)  # seconds, for the whole request

        async with aiohttp.ClientSession(timeout=timeout) as session:
            try:
                async with session.get(url, headers=headers) as response:
                    if response.status == 200:
                        # Key is valid, extract model information
                        api_key.is_valid = True
                        api_key.message = "Valid API key"
                        # Parse models information
                        data = await response.json()
                        # Process model data...
                    else:
                        # Treated as invalid (note: a 429 or 5xx also lands here)
                        api_key.is_valid = False
                        api_key.message = f"Invalid API key: {response.status} {response.reason}"
            except Exception as e:
                # Network errors and timeouts are reported, not raised to the UI
                api_key.is_valid = False
                api_key.message = f"Error validating API key: {str(e)}"

        return api_key
```

Factory Pattern for Validators

The application uses the factory pattern to create the appropriate validator for each provider. Provider selection lives in one place, so adding a provider does not require touching the existing validators.

```python
# Provider, Validator and the concrete validator classes come from the project.


class ValidatorFactory:
    """Factory for creating validators for different providers."""

    @staticmethod
    def create_validator(provider: Provider) -> Validator:
        """
        Create a validator for the specified provider.

        Args:
            provider: The provider to create a validator for

        Returns:
            A validator for the specified provider
        """
        if provider == Provider.OPENAI:
            return OpenAIValidator()
        elif provider == Provider.ANTHROPIC:
            return AnthropicValidator()
        elif provider == Provider.MISTRAL:
            return MistralValidator()
        # Additional providers...
        else:
            raise ValueError(f"No validator available for provider: {provider}")
```

The factory pattern is particularly useful in this application because it keeps provider-specific construction in one place. When a new LLM provider emerges, you add a new validator class and one branch in the factory, and none of the existing validators change. Strictly speaking, an if/elif chain still means editing the factory itself. It therefore only approximates the Open/Closed Principle (open for extension, closed for modification), whereas a registry that validators add themselves to removes even that edit.
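A common alternative to an if/elif factory is a registry that each validator class adds itself to. With a registry, the factory never needs editing when a provider is added. The sketch below is self-contained and simplified: its `Provider` enum has only two members and `validate` takes a plain string. `register` and `_REGISTRY` are hypothetical names rather than the project's API.

```python
from __future__ import annotations

from abc import ABC, abstractmethod
from enum import Enum
from typing import Callable, Dict, Type


class Provider(Enum):
    OPENAI = "openai"
    ANTHROPIC = "anthropic"


class Validator(ABC):
    @abstractmethod
    async def validate(self, api_key: str) -> bool:
        """Return True if the key is accepted by the provider."""


# Provider -> validator class; filled in by the @register decorator
_REGISTRY: Dict[Provider, Type[Validator]] = {}


def register(provider: Provider) -> Callable[[Type[Validator]], Type[Validator]]:
    """Class decorator that records which validator handles a provider."""
    def decorator(cls: Type[Validator]) -> Type[Validator]:
        _REGISTRY[provider] = cls
        return cls
    return decorator


def create_validator(provider: Provider) -> Validator:
    cls = _REGISTRY.get(provider)
    if cls is None:
        raise ValueError(f"No validator available for provider: {provider}")
    return cls()


@register(Provider.OPENAI)
class OpenAIValidator(Validator):
    async def validate(self, api_key: str) -> bool:
        return api_key.startswith("sk-")  # placeholder check, no network call
```

Registration happens at import time. The module defining each validator must therefore be imported somewhere, for example in the package's `__init__.py`, for its decorator to run.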

Lessons Learned

Building this project provided several valuable insights:

- API Inconsistency: Each provider has its own way of structuring APIs, response formats, and authentication methods. Creating a unified interface required careful abstraction.

- Rate Limiting Challenges: When validating multiple keys, it's important to respect rate limits to avoid being temporarily blocked by providers.

- Security Considerations: Handling API keys requires careful attention to security. The application never stores keys permanently unless explicitly requested by the user.

- UI/UX Balance: Creating a technical tool that remains user-friendly required thoughtful UI design and clear presentation of complex information.
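Respecting rate limits during bulk runs usually means retrying on HTTP 429 rather than treating it as a failed key. One common approach is exponential backoff that prefers the server's `Retry-After` hint when one is present. The `attempt` callable shape and `call_with_backoff` are hypothetical. OpenAI, for one, also uses 429 for exhausted quota, where retrying will not help, so the response body is worth inspecting as well.

```python
import asyncio
import random
from typing import Awaitable, Callable, Optional, Tuple

# Hypothetical call shape: performs one request and returns
# (HTTP status, Retry-After seconds or None).
Attempt = Callable[[], Awaitable[Tuple[int, Optional[float]]]]


async def call_with_backoff(attempt: Attempt, max_retries: int = 4, base_delay: float = 0.5) -> int:
    """Retry on HTTP 429, preferring Retry-After, else exponential backoff with jitter."""
    retry = 0
    while True:
        status, retry_after = await attempt()
        if status != 429 or retry >= max_retries:
            return status  # success, a non-rate-limit error, or out of retries
        # Server hint wins; otherwise wait 0.5s, 1s, 2s, ... (doubling each time)
        delay = retry_after if retry_after is not None else base_delay * 2 ** retry
        # Add up to 10% random jitter so parallel callers don't retry in lockstep
        await asyncio.sleep(delay * (1 + random.random() * 0.1))
        retry += 1
```

Jitter spreads retries out, so many concurrent validations that hit the same limit do not all retry at the same instant.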

Future Enhancements

The LLM API Key Validator is an ongoing project with several planned enhancements:

- Support for additional LLM providers as they emerge

- Enhanced visualization of usage patterns over time

- Integration with cost estimation tools to predict spending

- API endpoint for programmatic validation from other applications

- Team collaboration features for shared key management

Conclusion

The LLM API Key Validator addresses a critical need in the AI development ecosystem by providing a unified interface for managing API keys across multiple providers. By automating validation and presenting quota information in a clear, consistent format, it helps developers focus on building AI applications rather than managing infrastructure.

The project is open-source and available on GitHub. Contributions are welcome, whether in the form of new provider validators, feature enhancements, or documentation improvements.

As the AI landscape continues to evolve with new providers and models, tools like this will become increasingly important for developers working at the cutting edge of AI technology.